CPU Virtualization in Operating Systems: A Powerful Illusion Explained (2025 Guide)

CPU virtualization is one of the most brilliant tricks in the operating system’s playbook — a masterstroke that allows your device to juggle dozens of tasks, making you believe it has superpowers. But how does one physical processor handle video streaming, antivirus scanning, code compiling, and background syncing all at the same time?

“Virtualization makes one processor behave like many. It’s the OS’s sleight of hand — and it’s genius.”

In this article, we break down CPU virtualization in a human-friendly way. Whether you’re a student, techie, or future hacker, you’ll walk away understanding not just the “what” but the “how” and “why” of CPU virtualization.

Let’s begin!

What is CPU Virtualization?

At its core, CPU virtualization is the operating system’s way of creating the illusion that every program has its own dedicated processor. This is crucial because, in reality, most computers—even those with multiple cores—have far fewer processors than running applications. The operating system steps in as a master illusionist, using clever scheduling and time-sharing techniques to distribute processing time across all active programs.

This illusion is so seamless that users rarely notice that most of their applications are not truly running at the same instant. A single core executes only one process at a time, and even a multi-core machine usually has far more runnable processes than cores. Multitasking appears smooth because each application takes turns on the processor in intervals of a few milliseconds, so fast that it feels like everything is running at once.

Behind the scenes, your operating system keeps a list of all processes waiting to run and decides who gets to use the CPU next. It ensures no application monopolizes the processor while others sit idle. And if a task becomes unresponsive or crashes, the OS can recover or kill the process without affecting the entire system.
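To make the turn-taking concrete, here is a minimal sketch in Python. It is a toy model, not how a real kernel is written: each "program" is a generator, each `yield` stands in for the end of a time slice, and the names `task` and `run_time_shared` are made up for this illustration.

```python
from collections import deque

def task(name, steps):
    """A toy 'program': each yield marks the end of one time slice."""
    for i in range(1, steps + 1):
        yield f"{name}:{i}"

def run_time_shared(tasks):
    """Give each task one slice in turn until all of them have finished."""
    ready = deque(tasks)              # processes waiting for the CPU
    trace = []
    while ready:
        current = ready.popleft()     # the "OS" picks the next process
        try:
            trace.append(next(current))   # run it for one slice
            ready.append(current)         # then send it to the back of the line
        except StopIteration:
            pass                          # the task has finished, so drop it
    return trace

trace = run_time_shared([task("music", 2), task("browser", 3), task("sync", 1)])
print(trace)
# ['music:1', 'browser:1', 'sync:1', 'music:2', 'browser:2', 'browser:3']
```

Only one task runs at any moment, yet the trace shows all three making progress side by side, which is the illusion described above.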

Why Do Operating Systems Use CPU Virtualization?

The reasons behind CPU virtualization are deeply rooted in efficiency, usability, and scalability. Let’s walk through each of them with a narrative lens:

Without virtualization, a single-core processor would have to run each task to completion before starting the next one. This would be disastrous for modern computing. You wouldn’t be able to listen to music while browsing the web. You couldn’t update your system while working on a document. Worse, if one application froze, the whole system might become unresponsive.

To combat this, operating systems create a system of fair scheduling. They rapidly switch between tasks, handing out short time slices so that every process makes progress and none is starved of CPU time. This ensures that your experience remains fluid and responsive, even when you’re juggling multiple tasks.

Virtualization also isolates processes, meaning that if one misbehaves, it doesn’t bring down the whole system. It allows for stable multi-user environments, which is crucial in servers and shared systems. And perhaps most significantly, CPU virtualization makes cloud computing and virtual machines possible—letting a single server host dozens or even hundreds of virtual computers, all believing they have exclusive access to the processor.
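Process isolation is easy to see for yourself. The sketch below, using only Python's standard library, starts a child process that crashes on purpose and shows that the parent carries on. The crashing one-liner is an invented example, and the snippet assumes the environment allows spawning a child Python interpreter.

```python
import subprocess
import sys

# A child program that dies with an uncaught exception (a simulated crash).
crashing_code = "raise RuntimeError('simulated crash')"

result = subprocess.run(
    [sys.executable, "-c", crashing_code],  # run it in a separate process
    capture_output=True,
    text=True,
)

# The parent reaches this line even though the child failed.
print("child exit code:", result.returncode)
print("parent is still running")
```

The child gets its own process, so its failure shows up to the parent only as a non-zero exit code and some text on `stderr`.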

How CPU Virtualization Works

The inner workings of CPU virtualization are complex but fascinating. Let’s paint a mental picture of the mechanisms that make this magic happen.

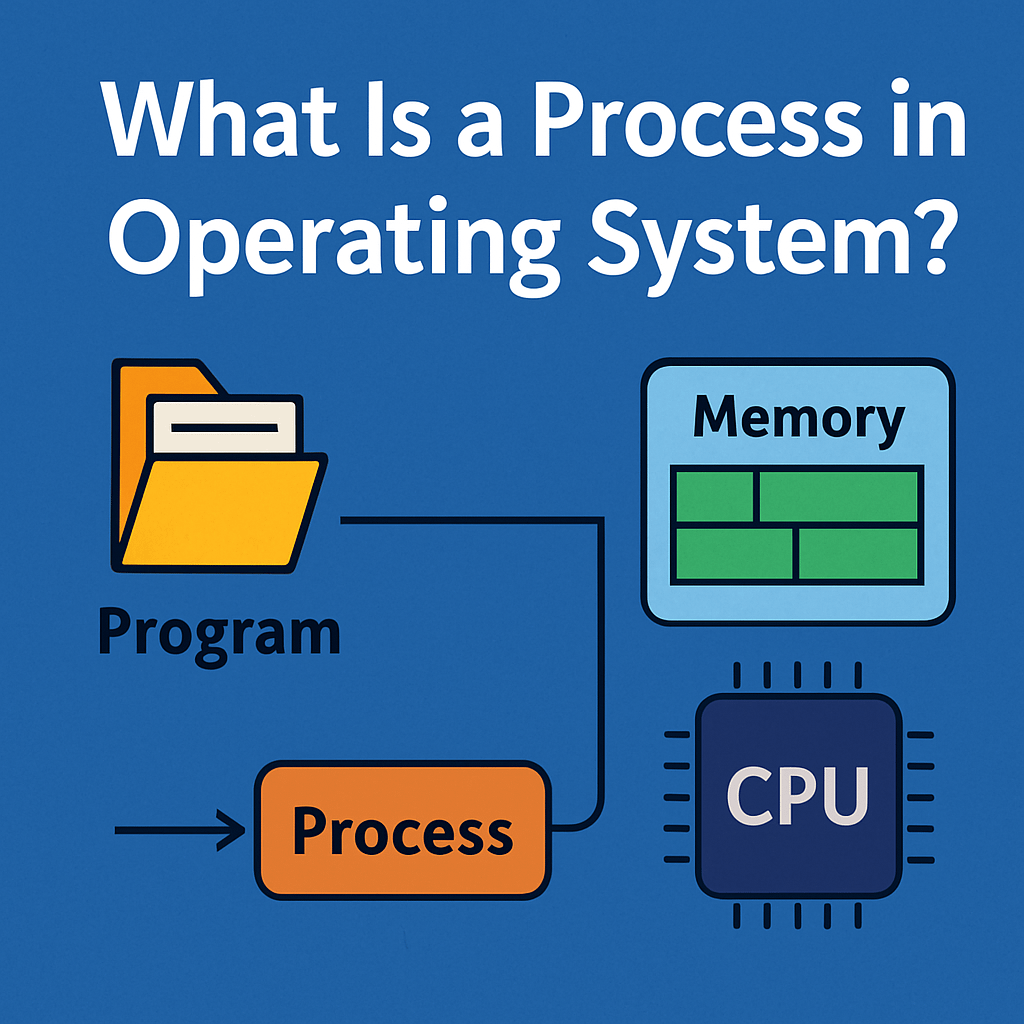

When you launch an application, the operating system creates a process, an independent unit of execution. The OS records every process in a data structure known as the process table, and keeps the ones that are ready to run in a queue. Each process has its own state, its own address space, and its own context (the register values it needs in order to resume).
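As a rough picture of that bookkeeping, here is a simplified sketch of a process table. The field names (`pid`, `state`, `program_counter`, `registers`) and the helper `create_process` are assumptions chosen for illustration; real kernels track far more per process.

```python
from dataclasses import dataclass, field
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

@dataclass
class PCB:
    """Process control block: what the OS remembers about one process."""
    pid: int
    name: str
    state: State = State.READY
    program_counter: int = 0
    registers: dict = field(default_factory=dict)

process_table = {}  # pid -> PCB

def create_process(pid, name):
    """Register a new process; it starts out in the READY state."""
    pcb = PCB(pid=pid, name=name)
    process_table[pid] = pcb
    return pcb

create_process(1, "browser")
create_process(2, "music")
process_table[1].state = State.RUNNING   # the scheduler picked pid 1

ready = [p.pid for p in process_table.values() if p.state is State.READY]
print(ready)  # [2]
```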

Now, imagine the CPU as a tiny stage, and each process as an actor. The operating system is the director, carefully giving each actor a turn on stage. When one finishes its line (or needs a break), the director swaps it out for the next. This moment of transition is known as a context switch—a process where the OS stores the current state of one process and loads the state of another.
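The save-and-restore step can be sketched in a few lines. This is a toy model under stated assumptions: the "CPU" has just two made-up registers (`pc` and `acc`), and the `CPU` and `Process` classes are hypothetical stand-ins for what real hardware and kernels do.

```python
from dataclasses import dataclass, field

@dataclass
class Process:
    name: str
    # Register values saved while the process is off the CPU.
    saved: dict = field(default_factory=lambda: {"pc": 0, "acc": 0})

class CPU:
    def __init__(self):
        self.regs = {"pc": 0, "acc": 0}
        self.current = None

    def context_switch(self, nxt):
        if self.current is not None:
            self.current.saved = dict(self.regs)  # store outgoing state
        self.regs = dict(nxt.saved)               # load incoming state
        self.current = nxt

    def step(self):
        """Pretend to execute one instruction."""
        self.regs["pc"] += 1
        self.regs["acc"] += 10

cpu = CPU()
a, b = Process("A"), Process("B")

cpu.context_switch(a)
cpu.step()
cpu.step()                 # A runs two instructions
cpu.context_switch(b)
cpu.step()                 # B runs one instruction
cpu.context_switch(a)      # A comes back on stage

print(cpu.regs)  # {'pc': 2, 'acc': 20}
print(b.saved)   # {'pc': 1, 'acc': 10}
```

Process A resumes exactly where it left off, with no sign that B ever used the processor in between.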

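Modern CPUs also include built-in virtualization extensions like Intel VT-x or AMD-V. These do not speed up ordinary switches between processes. Instead, they let a hypervisor run a guest operating system's code directly on the processor and step in only for sensitive operations, which is what makes running full virtual machines efficient.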
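On Linux, you can check whether these extensions are visible by looking at the CPU flags in `/proc/cpuinfo`: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The helper below is a small sketch (the function name `hw_virt_support` is made up here), and it assumes an x86 Linux system. Inside a virtual machine, or when the feature is disabled in firmware, the flag may be absent.

```python
from pathlib import Path

def hw_virt_support(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None based on x86 CPU flag names."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None

# A hand-written sample in the same layout as /proc/cpuinfo.
sample = "processor\t: 0\nflags\t\t: fpu vme sse2 vmx\n"
print(hw_virt_support(sample))  # Intel VT-x

# On a real Linux machine, read the actual file if it exists.
cpuinfo = Path("/proc/cpuinfo")
if cpuinfo.exists():
    print(hw_virt_support(cpuinfo.read_text()))
```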

To schedule which process runs when, operating systems use scheduling algorithms. These can range from:

- Round Robin, where each process gets an equal time slice in rotation,
- Priority Scheduling, where more critical tasks get scheduled first, or
- First-Come, First-Served, which runs processes in the order they arrive.

These algorithms are invisible to users, but they form the beating heart of CPU virtualization—deciding in real time who gets CPU access and for how long.
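To compare the three policies side by side, here is a small simulation. It is a simplified sketch: the job list, burst times, and priorities are invented, every job is assumed to arrive at time 0, the priority version is non-preemptive, and context-switch cost is ignored. Each function returns the time at which every job finishes.

```python
from collections import deque

# (name, burst_time, priority); a lower priority number means more urgent.
JOBS = [("A", 5, 2), ("B", 2, 1), ("C", 3, 3)]

def fcfs(jobs):
    """First-Come, First-Served: run each job to completion in list order."""
    t, finish = 0, {}
    for name, burst, _ in jobs:
        t += burst
        finish[name] = t
    return finish

def priority(jobs):
    """Non-preemptive priority: most urgent job first, then run to completion."""
    return fcfs(sorted(jobs, key=lambda job: job[2]))

def round_robin(jobs, quantum):
    """Round Robin: each job runs for at most `quantum` units per turn."""
    remaining = {name: burst for name, burst, _ in jobs}
    ready = deque(name for name, _, _ in jobs)
    t, finish = 0, {}
    while ready:
        name = ready.popleft()
        run = min(quantum, remaining[name])
        t += run
        remaining[name] -= run
        if remaining[name] == 0:
            finish[name] = t          # job is done
        else:
            ready.append(name)        # not done: back of the queue
    return finish

print(fcfs(JOBS))            # {'A': 5, 'B': 7, 'C': 10}
print(priority(JOBS))        # {'B': 2, 'A': 7, 'C': 10}
print(round_robin(JOBS, 2))  # {'B': 4, 'C': 9, 'A': 10}
```

All three finish the whole workload at time 10, but the short job B finishes at 7, 2, or 4 depending on the policy. That difference in who waits, and for how long, is exactly what the scheduler is deciding.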

A Dialogue: Demystifying CPU Virtualization

Let’s shift gears for a moment. Instead of dry theory, imagine a classroom conversation between a student and a systems engineer.

This analogy simplifies what’s otherwise a very technical concept, helping us see that behind the scenes, operating systems are conducting a beautiful performance of task juggling and time slicing.

Real-World Applications of CPU Virtualization

CPU virtualization isn’t just an academic concept—it powers the modern digital world. It’s used in everything from the phone in your pocket to the massive cloud data centers that serve billions.

In virtual machines, CPU virtualization allows a single physical computer to run multiple operating systems simultaneously. Tools like VMware or VirtualBox rely on the OS and hardware support to allocate virtual CPUs to each virtual machine, creating fully functional and isolated environments.

In cloud platforms like AWS, Google Cloud, and Azure, virtualization enables thousands of customers to share the same physical hardware securely and efficiently. Without virtualization, cloud computing as we know it would be impossible.

Even your smartphone depends on CPU virtualization. Mobile operating systems like Android and iOS isolate background processes, ensuring that your device remains smooth and secure.

Game engines, web browsers, server infrastructure, and containerized environments all rely heavily on the principles of CPU virtualization to stay responsive, secure, and scalable.

Challenges and Limitations

Despite its elegance, CPU virtualization isn’t without drawbacks. There’s always a cost to abstraction, and this cost comes in the form of overhead and latency.

Every time the OS performs a context switch, it uses up CPU cycles. These transitions, though fast, aren’t free. In environments with hundreds or thousands of processes, the overhead can become significant.
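A quick back-of-the-envelope calculation shows how this cost scales. If every time slice is followed by one switch, the fraction of CPU time lost is the switch cost divided by slice plus switch cost. The 5-microsecond switch cost below is an assumed figure for illustration only; real costs vary by hardware and workload.

```python
def switch_overhead(time_slice_us, switch_cost_us):
    """Fraction of CPU time spent switching, assuming one switch per slice."""
    return switch_cost_us / (time_slice_us + switch_cost_us)

SWITCH_COST_US = 5  # assumed value, not a measurement

for slice_us in (10_000, 1_000, 100):
    print(f"{slice_us} us slice: {switch_overhead(slice_us, SWITCH_COST_US):.2%}")
# 10000 us slice: 0.05%
# 1000 us slice: 0.50%
# 100 us slice: 4.76%
```

Shorter slices make the system feel more responsive, but a larger share of each second goes to the switching itself.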

There’s also the issue of latency. If too many tasks compete for CPU time, some processes may be delayed, causing slowdowns or stuttering. And while modern hardware has features to support virtualization, not all CPUs are created equal—older hardware may struggle with advanced virtual environments.
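The delay is easy to estimate for a plain round-robin scheduler: a process that has just used its slice may have to wait for every other ready process to take a turn. The function below is a sketch under that assumption (real schedulers use priorities and other heuristics), and the 10 ms quantum is an example value.

```python
def worst_case_wait_ms(num_ready, quantum_ms, switch_ms=0.0):
    """Longest wait before a process runs again under plain round robin."""
    if num_ready < 1:
        raise ValueError("need at least one ready process")
    return (num_ready - 1) * (quantum_ms + switch_ms)

for n in (4, 50, 500):
    print(n, "ready processes:", worst_case_wait_ms(n, quantum_ms=10), "ms")
# 4 ready processes: 30.0 ms
# 50 ready processes: 490.0 ms
# 500 ready processes: 4990.0 ms
```

With a handful of busy processes the wait is imperceptible, but with hundreds competing for one core it stretches into seconds, which is where the stuttering comes from.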

Furthermore, security becomes critical. A poorly designed hypervisor (the software that manages virtual machines) can open doors to serious vulnerabilities, potentially exposing multiple systems to attack.

Conclusion

CPU virtualization is a modern miracle—an invisible layer of logic and scheduling that lets your computer appear far more powerful than it really is. By giving each application the illusion of its own processor, it transforms a single-core machine into a multitasking powerhouse and turns physical hardware into a flexible, scalable platform.

In this post, we’ve explored how CPU virtualization:

- Creates the illusion of multiple CPUs
- Enables multitasking, process isolation, and resource sharing
- Drives cloud infrastructure and virtual environments
- Balances performance with complexity and trade-offs

Understanding CPU virtualization isn’t just useful for developers or engineers—it’s foundational for anyone serious about how modern systems really work.
